This is the first of a five-part series I'm writing about redefining human creativity in the age of AI.

I want to help save our idea of human creativity.

Artificial intelligence can write, illustrate, design, code, and much more. But rather than eliminating the need for human creativity, these new powers can help us redefine and expand it.

We need to do a technological dissection of language models, defining what they can do well—and what they can’t. By doing so, we can isolate our own role in the creative process.

If we can do that, we’ll be able to wield language models for creative work—and still call it creativity.

To start, let’s talk about what language models can do.

The psychology and behavior of language models

The current generation of language models is called transformers, and in order to understand what they do, we need to take that word seriously. What kind of transformations can transformers do?

Mathematically, language models are recursive next-token predictors. They are given a sequence of text and predict the next bit of text in the sequence. This process runs over and over in a loop, building upon its previous outputs self-referentially until it reaches a stopping point. It’s sort of like a snowball rolling downhill and picking up more and more snow along the way.
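The loop described above can be sketched in a few lines of Python. This is a toy under loud assumptions. The “model” is a hypothetical hand-written lookup table (`NEXT_TOKENS`) that looks only at the last token, whereas a real transformer uses a neural network that conditions on the entire sequence so far. What the sketch does show is the recursion: each predicted token is appended to the input and fed back in until a stop token appears.

```python
import random

# Hypothetical toy "model": for each token, the tokens allowed to follow it.
# A real language model scores every token in a large vocabulary with a
# neural network; this table only stands in for that step.
NEXT_TOKENS = {
    "<start>": ["the"],
    "the": ["snowball"],
    "snowball": ["rolls"],
    "rolls": ["downhill", "faster"],
    "downhill": ["and"],
    "faster": ["and"],
    "and": ["grows", "<stop>"],
    "grows": ["<stop>"],
}


def predict_next(tokens: list[str], rng: random.Random) -> str:
    """Pick the next token given everything generated so far.

    This toy version only looks at the last token; a transformer
    conditions on the whole sequence.
    """
    return rng.choice(NEXT_TOKENS[tokens[-1]])


def generate(prompt: list[str], max_tokens: int = 20, seed: int = 0) -> list[str]:
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(max_tokens):
        nxt = predict_next(tokens, rng)
        if nxt == "<stop>":  # the stopping point
            break
        tokens.append(nxt)  # the output becomes part of the next input
    return tokens


print(" ".join(generate(["<start>"])[1:]))
```

Like the snowball, the sequence only ever grows by building on what is already there.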

But this question is best asked at a higher level than simply mathematical possibility. Instead, what are the inputs and outputs we observe from today’s language models? And what can we infer about how they think?

In essence, we need to study LLMs’ behavior and psychology, rather than their biology and physics.

This is a sketch based on experience. It’s a framework I’ve built for the purposes of doing great creative work with AI.

A framework for what language models do

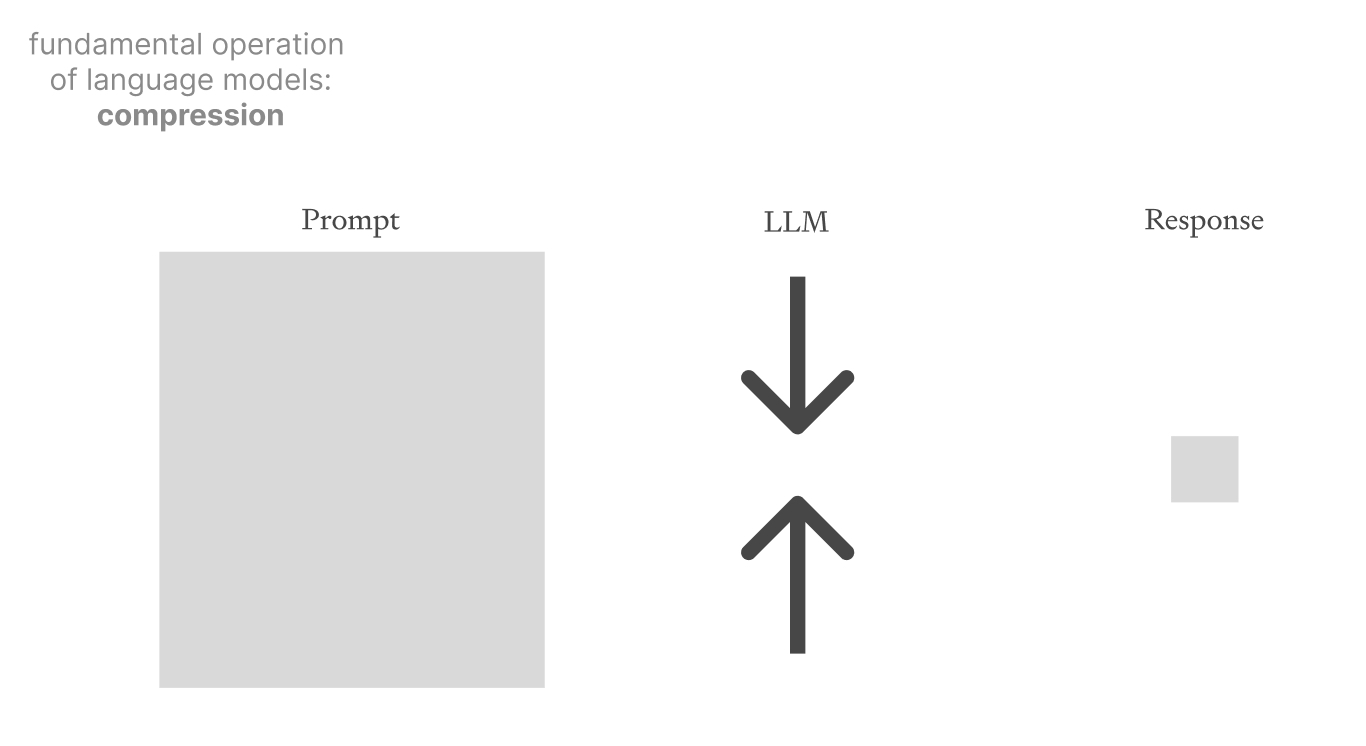

Language models transform text in the following ways:

- Compression: They compress a big prompt into a short response.

- Expansion: They expand a short prompt into a long response.

- Translation: They convert a prompt in one form into a response in another form.

These are manifestations of their outward behavior. From there, we can infer a property of their psychology—the underlying thinking process that creates their behavior:

- Remixing: They mix two or more texts (or learned representations of texts) together and interpolate between them.
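To make “interpolate” concrete: if two texts are each represented as a list of numbers, linear interpolation blends them using a weight `t` between 0 and 1. The three-number vectors below are hypothetical stand-ins, and the sketch is only an analogy for the word “interpolate.” It is not a claim about how a language model actually combines texts internally.

```python
def interpolate(a: list[float], b: list[float], t: float) -> list[float]:
    """Linear interpolation: t=0 returns a, t=1 returns b, values in between blend them."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


# Hypothetical 3-number "representations" of two different texts.
text_a_vec = [1.0, 0.0, 0.5]
text_b_vec = [0.0, 1.0, 0.5]

for t in (0.0, 0.5, 1.0):
    print(t, interpolate(text_a_vec, text_b_vec, t))
```

At `t = 0.5` the result sits halfway between the two inputs, which is the intuition behind calling a blend of two texts a remix.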

I’m going to break down these elements in successive parts of this series over the next few weeks. None of these answers are final, so consider this a public exploration that’s open for critique. Today, I want to talk to you about the first operation: compression.

Language models as compressors

Language models can take any piece of text and make it smaller:

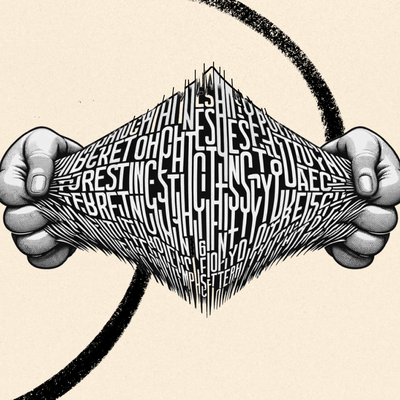

Source: All images courtesy of the author.

This might seem simple, but, in fact, it’s a marvel. Language models can take a big chunk of text and smush it down like a foot crushing a can of Coke. Except it doesn’t come out crushed: it comes out as a perfectly packaged and proportional mini-Coke. And it’s even drinkable! This is a Willy Wonka-esque magic trick, without the Oompa Loompas.
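One rough way to put a number on “smaller” is a compression ratio: the length of the output divided by the length of the input. The sketch below assumes whitespace-separated word counts as the measure of length. Models themselves count tokens, which don’t map one-to-one onto words, so treat this as an approximation. `compression_ratio` is a hypothetical helper, not part of any library.

```python
def compression_ratio(source: str, summary: str) -> float:
    """Words in the summary divided by words in the source (lower means more compressed)."""
    source_words = len(source.split())
    if source_words == 0:
        raise ValueError("source text is empty")
    return len(summary.split()) / source_words


print(compression_ratio("the shadow follows Ged across the sea", "Ged flees"))
```

A summary that keeps a tenth of the words has a ratio of 0.1; the mini-Coke is a tenth of the can.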

Language model compression comes in many different flavors. A common one is what I’ll call comprehensive compression, or summarization.

Language models are comprehensive compressors

Humans comprehensively compress things all the time—it’s called summarization. Language models are good at it in the same way a fifth grader summarizes a children’s novel for a book report, or the app Blinkist summarizes nonfiction books for busy professionals.

This kind of summarizing is intended to take a source text, pick out the ideas that explain its main points for a general reader, and reconstitute those into a compressed form for faster consumption:

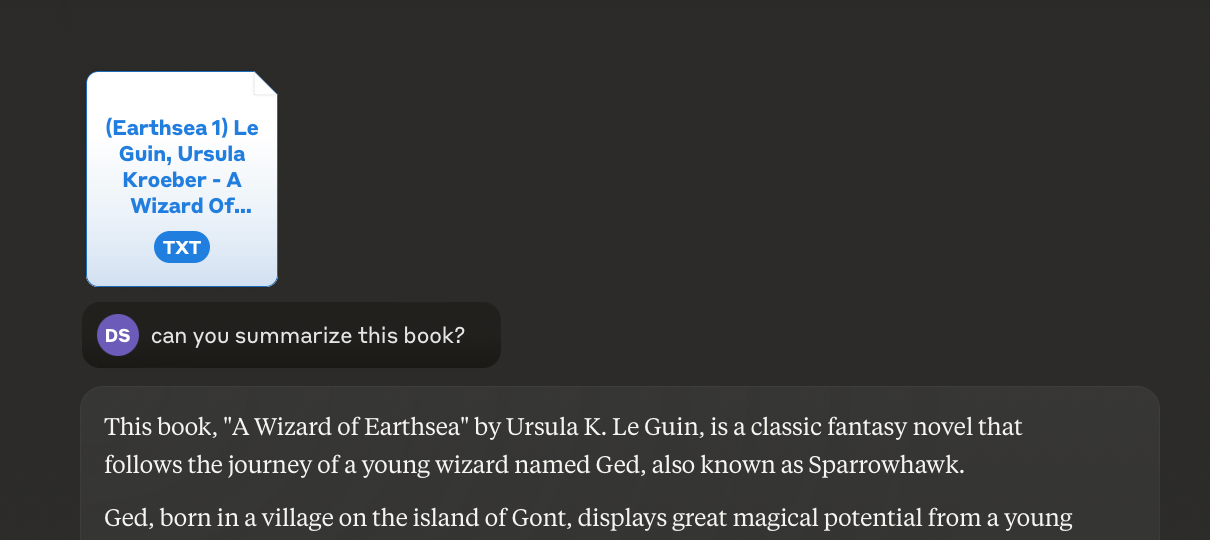
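The pick-out-and-reconstitute process can be illustrated with a much older technique than a language model: extractive summarization. It copies the highest-scoring sentences from the source instead of writing new ones, as a fifth grader or a language model would. The sketch below scores each sentence by how often its content words appear across the whole text. The stopword list, the naive sentence splitter, and the `summarize` helper are all hypothetical simplifications for illustration.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "that",
             "he", "his", "with", "for", "on", "as", "was", "by"}


def split_sentences(text: str) -> list[str]:
    # Naive split: break after ., !, or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def content_words(text: str) -> list[str]:
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text: str, n_sentences: int = 2) -> str:
    sentences = split_sentences(text)
    freq = Counter(content_words(text))  # how often each content word appears overall

    def score(sentence: str) -> float:
        ws = content_words(sentence)
        return sum(freq[w] for w in ws) / len(ws) if ws else 0.0

    # Pick the highest-scoring sentences...
    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    top = ranked[:n_sentences]
    # ...and reassemble them in their original order.
    return " ".join(sentences[i] for i in sorted(top))


text = ("Ged is a young wizard. Ged releases a shadow. "
        "The weather was mild. Ged hunts the shadow.")
print(summarize(text, n_sentences=2))
# → Ged releases a shadow. Ged hunts the shadow.
```

The toy text is three loosely Earthsea-flavored sentences plus one deliberate filler about the weather; the filler is the first thing to go. A language model paraphrases rather than copying sentences, but the basic idea of keeping the main points and discarding the rest is similar.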

These summaries are intended to be both comprehensive (they note all the main ideas) and helpful for the average reader (they express the main ideas at a high level with little background knowledge assumed).

In the same way, a language model like Anthropic’s Claude, given the text of the Ursula K. Le Guin classic A Wizard of Earthsea, will easily output a comprehensive summary of the book’s main plot points.

But comprehensive compression isn’t the only thing language models can do. You can compress text without being comprehensive, which creates room for totally different forms of compression.

Language models are engaging compressors
